Saving a model's state_dict with the torch.save() function gives you the most flexibility for restoring the model later. This is the recommended method for saving models, because only the trained model's learned parameters really need to be saved. When saving and loading an entire model, you save the whole module using Python's pickle module. This approach yields the most intuitive syntax and involves the least amount of code. The disadvantage is that the serialized data is bound to the specific classes and the exact directory structure used when the model is saved. The reason is that pickle does not save the model class itself.

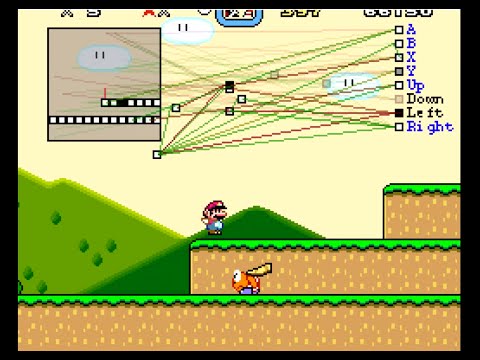

Rather, it saves a path to the file containing the class, which is used at load time. Because of this, your code can break in various ways when used in other projects or after refactors. In this recipe, we'll explore both ways to save and load models for inference. Often while training deep learning models, we tend to save and use the latest checkpoint for inference. In most cases this may not matter much, but there is a high chance that we are using an overfit model.
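To make the state_dict approach described above concrete, here is a minimal sketch. The `TinyNet` class, the tensor sizes, and the temporary file path are assumptions made only for illustration; they do not come from the original tutorial code.

```python
import os
import tempfile

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical stand-in model; replace with your own architecture."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(x)


model = TinyNet()
save_path = os.path.join(tempfile.mkdtemp(), "model_state.pth")  # assumed path

# Save only the learned parameters (the state_dict), not the pickled module.
torch.save(model.state_dict(), save_path)

# To restore, rebuild the architecture first, then load the parameters into it.
restored = TinyNet()
restored.load_state_dict(torch.load(save_path, map_location="cpu"))
restored.eval()  # switch to inference mode before predicting

# Both models should now produce the same output for the same input.
with torch.no_grad():
    sample = torch.randn(1, 4)
    same_output = torch.allclose(model(sample), restored(sample))
```

Because only tensors are stored, the file does not depend on where the class definition lives, which is the main reason this route tends to survive refactors better than pickling the whole module.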

It is usually a far better idea to use the best model for inference or testing on images and videos after training. In this tutorial, you will learn how to save and load the best model in PyTorch. A neural network takes inputs, which are then processed by hidden layers using weights that are adjusted during training.

There are a few loopholes in the above experiment on saving the best model in PyTorch. If you train for even longer with the current settings and parameters, the model will overfit even more. If you then run the test script again, there is a very high chance that the last-epoch model will give higher accuracy. The reason is that the test data is from the same distribution as the training data. So, in a way, the model will have memorized the dataset and will therefore give higher accuracy. If you find a few images from the internet, say, belonging to the horse class, the model may not be able to predict their classes correctly.

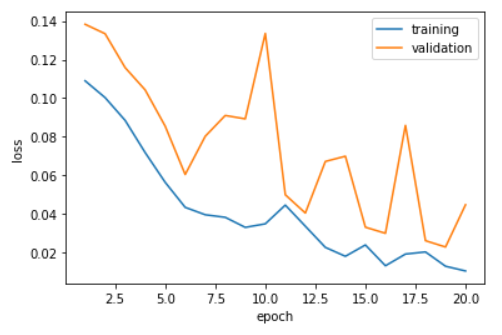

Therefore, if you want to train longer, consider adding a lot of regularization. This can include learning rate schedulers, more data augmentations, or even writing a custom model better suited to the CIFAR10 dataset. For example, in the above graphs, even though we can see the accuracies improving until the end, the validation loss is deteriorating. This means that the model is overfitting after a certain number of epochs. But what if we want to use a set of weights from this training run? How do we save the best weights in PyTorch while training a deep learning model?

That is exactly what we will learn in this tutorial. While building the PyTorch ResNet18 model, we do not load any ImageNet pre-trained weights. For the number of classes, we modify the final fully connected layer on line 29. After training a deep learning model with PyTorch, it's time to use it. In this tutorial, we covered how you can save and load your PyTorch models using torch.save and torch.load.

I always prefer to use Torch7 (.t7) or Pickle (.pth, .pt) to save PyTorch model weights. One function tests the best model and the other tests the saved last-epoch model. These two functions load the weights into the ResNet18 architecture from the checkpoints and call the above test() function.

Note that `torch.save(network.state_dict(), save_path)` saves the model weights for the current device. If you are training on a GPU and need to save the model for use on a CPU, you can write `torch.save(network.cpu().state_dict(), save_path)`. But then we need to send the model back to the device that you are using for training.
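The device handling described above could look roughly like the following sketch. The function body, the `nn.Linear` stand-in network, and the temporary path are assumptions for illustration, not the original author's code. The `map_location` argument of `torch.load` is shown as a complementary option on the loading side.

```python
import os
import tempfile

import torch
import torch.nn as nn


def save_network(network, save_path, device):
    """Save CPU weights, then return the network to its training device."""
    # Moving to CPU first keeps the saved tensors free of any GPU device tag.
    torch.save(network.cpu().state_dict(), save_path)
    # Send the model back so training can continue where it was running.
    network.to(device)


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
network = nn.Linear(3, 1).to(device)  # stand-in for the real network

save_path = os.path.join(tempfile.mkdtemp(), "net.pth")  # assumed path
save_network(network, save_path, device)

# map_location lets a CPU-only machine load weights regardless of their origin.
state = torch.load(save_path, map_location="cpu")
saved_devices = {tensor.device.type for tensor in state.values()}
```

Forgetting the final `network.to(device)` is an easy mistake: the next training step would then mix CPU parameters with GPU inputs and fail.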

In this case, a save_network function saves the weights from the CPU copy of the model and then moves the model back to the training device. In this tutorial, we will try saving and loading models created with PyTorch. PyTorch is one of the main frameworks for deep learning these days and is widely used in the deep learning industry.

This save/load process uses the most intuitive syntax and involves the least amount of code. Saving a model this way saves the entire module using Python's pickle module. Speaking of loading saved PyTorch models from disk, next week you'll learn how to use pre-trained PyTorch models to recognize 1,000 image classes that you often encounter in everyday life. These models can save you a lot of time and hassle: they are highly accurate and don't require you to train them manually. Although the validation and training accuracy keep increasing until the end of training, the validation loss increases after epoch 20. This indicates overfitting, and the last epoch's model weights are certainly not the best ones.

The outputs folder contains the weights saved for the best and last-epoch models in PyTorch during training. The CIFAR10 dataset is a very well-known image classification dataset in the deep learning and computer vision community. There is a very high chance that you are already familiar with the dataset. Still, let's go over some of the important points about it.

I had a neural network up and running that I was using for other experiments, so I used it for my ONNX experiment. My existing neural network was a binary classifier for the Banknote Authentication problem. Basically, PyTorch lets you implement categorical cross-entropy in two separate ways. For instance, Keras models can be saved with the `h5` extension, PyTorch models as `pt`, and scikit-learn models as pickle files. ONNX provides a single standard for saving and exporting model files.

I plotted actuals vs. predictions for the regression example and see a constant line. I tried changing the learning rate, epochs, ReLU, and the number of hidden layers, but that didn't help. This is true for other datasets as well, not only the Boston Housing dataset.

It looks like something else needs to be changed in the program; any ideas?

Using the last model checkpoint or state dictionary to load the weights may prove to be a bit risky. If the test data is from the same sample space as the training data, the results may even be good. But the real problem arises when we try to run inference on a similar kind of data that is entirely unseen by the model. In those cases, there is a chance that the model will perform worse. In PyTorch, saving the model structure means saving the design of the model, that is, how it is constructed.

If you look at lines 32 and 34, we get train_dataset and valid_dataset from the same training distribution of CIFAR10. We do this so that we can apply different sets of transforms to each. To create the final training and validation datasets, we compute valid_size from VALID_SPLIT, and indices stores all the indices from the training set. dataset_train consists of the images from train_dataset before the valid_size cut-off, and dataset_valid contains the images from valid_dataset after it.
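The index-based train/validation split described above can be sketched as follows. Random tensors stand in for the CIFAR10 images so the example runs without a download, and the exact slicing (the last `valid_size` shuffled indices going to validation) is an assumption about what "before" and "after" `valid_size` mean; the value of `VALID_SPLIT` is also assumed.

```python
import torch
from torch.utils.data import Subset, TensorDataset

VALID_SPLIT = 0.1  # assumed fraction held out for validation

# Stand-ins for two views of the same training images; in the tutorial these
# would be the CIFAR10 training data wrapped with different transforms.
images = torch.randn(100, 3, 32, 32)
labels = torch.randint(0, 10, (100,))
train_dataset = TensorDataset(images, labels)
valid_dataset = TensorDataset(images, labels)

valid_size = int(VALID_SPLIT * len(train_dataset))
indices = torch.randperm(len(train_dataset)).tolist()  # shuffled indices

# Assumed slicing: all but the last valid_size indices are used for training,
# the last valid_size indices for validation, so the two sets never overlap.
dataset_train = Subset(train_dataset, indices[:-valid_size])
dataset_valid = Subset(valid_dataset, indices[-valid_size:])
```

Sharing one shuffled index list between the two `Subset` objects is what keeps a validation image from also appearing in the training set, even though both subsets read from the same underlying data.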

This recipe provides options to save and reload an entire model or just the parameters of the model. While reloading, this recipe copies the parameters from one net to another net. There are three main functions involved in saving and loading a model in PyTorch: torch.save, torch.load, and torch.nn.Module.load_state_dict. You don't train deep learning models without using them later. Instead, you want to save them so that you can load them later, allowing you to perform inference. The cool thing is that it needs only the layout of the neural network, such as 784,16,16,10, and the associated trained weights.

You can analyze different networks and their weights in the saved txt files. Anyway, this past weekend I sat down to code an example. My goal was to train a neural network model using PyTorch, save the trained model in ONNX format, then write a second program that uses the saved ONNX model to make a prediction.

Note the model.pth file: that is our trained PyTorch model saved to disk. We will load this model from disk and use it to make predictions in the next section. We then call torch.save to save our PyTorch model weights to disk so that we can load them and make predictions from a separate Python script.

The output directory will be populated with plot.png (a plot of our training/validation loss and accuracy) and model.pth once we run train.py. The code is unmodified; I just added a plot to the evaluate_model function, please see below. My torch version is 1.5.1, running on Anaconda on Windows 10. Could you check on your system whether you are seeing a constant line for predictions? In this case, we can see that the model achieved a classification accuracy of about 98 percent on the test dataset. We can then see that the model predicted class 5 for the first image in the training set.

The PyTorch API is simple and flexible, making it a favorite for academics and researchers in the development of new deep learning models and applications. Its extensive use has led to many extensions for specific applications, and to many pre-trained models that can be used directly. As such, it may be the most popular library used by academics. Although the difference is not huge, we can still call it an improvement. It looks like using the best weights from training really helps.

Please note that there is a chance that you may not get similar results, because the training is not deterministic and we are not setting any seed here. But I hope that you get the idea and the importance of saving the best model weights while training. We will call the functions we need from this module as we start training our neural network. While we are not going to focus on achieving any state-of-the-art result in this tutorial, we will try our best to get a test accuracy of more than 75%.

Also, we can quite easily load the CIFAR10 dataset using torchvision.datasets in PyTorch. A PyTorch model is saved during training with the help of the torch.save() function; after saving, we can load the model and continue training it. Before using the PyTorch model-saving function, the torch module needs to be installed. As we already saw, saving the model is important, especially when a deep neural network is involved. So, don't forget to save the model when working on large projects.

This function takes our model and the epoch number as inputs and saves the state dictionary of the model. In this machine learning project, you will learn how to load, fine-tune, and evaluate various transformer models for text classification tasks. According to @smth (discuss.pytorch.org/t/saving-and-loading-a-model-in-pytorch/…), the model is reloaded in training mode by default. So you need to manually call the_model.eval() after loading if you are loading it for inference, not resuming training. In other words, save a dictionary of each model's state_dict and the corresponding optimizer. As mentioned before, you can save any other items that may help you resume training by simply adding them to the dictionary.
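A checkpoint dictionary of the kind described above might be written and read back as in this sketch. The helper name `save_checkpoint`, the dictionary keys, the `nn.Linear` stand-in model, and the optimizer settings are all assumptions chosen for illustration.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.optim as optim


def save_checkpoint(model, optimizer, epoch, path):
    """Hypothetical helper: bundle everything needed to resume training."""
    torch.save(
        {
            "epoch": epoch,
            "model_state_dict": model.state_dict(),
            "optimizer_state_dict": optimizer.state_dict(),
        },
        path,
    )


model = nn.Linear(4, 2)  # stand-in for the real network
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pth")  # assumed path
save_checkpoint(model, optimizer, epoch=5, path=path)

# Later, possibly in another script: rebuild the objects, then restore state.
checkpoint = torch.load(path, map_location="cpu")
the_model = nn.Linear(4, 2)
the_model.load_state_dict(checkpoint["model_state_dict"])
new_optimizer = optim.SGD(the_model.parameters(), lr=0.01, momentum=0.9)
new_optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
start_epoch = checkpoint["epoch"] + 1

# A freshly built module starts in training mode, so switch explicitly.
the_model.eval()  # use the_model.train() instead when resuming training
```

Calling `eval()` matters mostly for layers such as dropout and batch normalization, which behave differently in training and inference modes.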

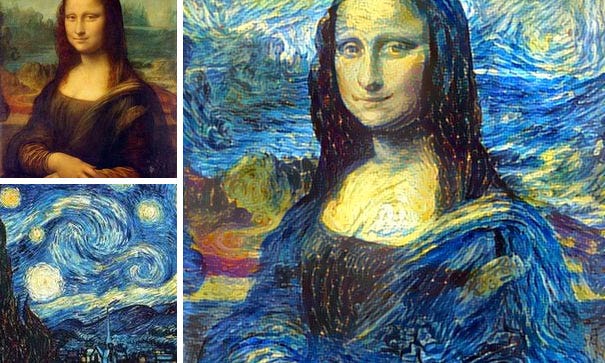

I have so many questions, such as how many layers a neural network can train, or how many hidden neurons a neural network needs in order to work with ten output classes. You can find some cool examples of trained neural networks inside that show what I found out. The idea is to be able to save a trained neural network, which was trained using any library, such as PyTorch, Keras, or scikit-learn, in a universal format. Assuming you want to use the model for inference, we create an inference session using the 'onnxruntime' Python package and use it to make predictions.

An MLP is a model with one or more fully connected layers. This model is appropriate for tabular data, that is, data as it looks in a table or spreadsheet, with one column for each variable and one row for each observation. There are three predictive modeling problems you may want to explore with an MLP: binary classification, multiclass classification, and regression. You will see how to develop PyTorch deep learning models for regression, classification, and predictive modeling tasks. At its core, PyTorch is a mathematical library that lets you perform efficient computation and automatic differentiation on graph-based models. Achieving this directly is challenging, but thankfully the modern PyTorch API provides classes and idioms that let you easily develop a suite of deep learning models.
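As a minimal sketch of the MLP idea above, the following defines a small network of fully connected layers for tabular input. The class name, the layer sizes, and the random batch are illustrative assumptions rather than values from the original tutorial.

```python
import torch
import torch.nn as nn


class MLP(nn.Module):
    """Small MLP for tabular data; the layer sizes are illustrative."""

    def __init__(self, n_inputs, n_hidden, n_outputs):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(n_inputs, n_hidden),  # fully connected hidden layer
            nn.ReLU(),
            nn.Linear(n_hidden, n_outputs),  # fully connected output layer
        )

    def forward(self, x):
        return self.layers(x)


# Three outputs here would suit a three-class problem; a single output is the
# usual choice for regression or binary classification.
model = MLP(n_inputs=8, n_hidden=16, n_outputs=3)
batch = torch.randn(5, 8)  # 5 rows (observations), 8 columns (variables)
logits = model(batch)
```

Only the size of the output layer and the loss function typically change between the three problem types; the body of the network can stay the same.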

On line 47, we initialize the SaveBestModel class as save_best_model. We invoke this after the training and validation steps of each epoch. For saving the best model in PyTorch for any dataset, the neural network architecture plays an important role. The code to prepare the CIFAR10 dataset for this tutorial is going to be a bit longer than usual. We need one training set, one validation set, and one test set as well.
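The original SaveBestModel code is not reproduced here, so the following is only one plausible sketch of such a class: it remembers the lowest validation loss seen so far and saves the weights whenever that loss improves. The constructor arguments, the checkpoint keys, the return value, and the made-up loss values are all assumptions.

```python
import os
import tempfile

import torch
import torch.nn as nn


class SaveBestModel:
    """Save the model whenever the validation loss improves."""

    def __init__(self, save_path, best_valid_loss=float("inf")):
        self.save_path = save_path
        self.best_valid_loss = best_valid_loss

    def __call__(self, current_valid_loss, epoch, model):
        # Only overwrite the checkpoint when this epoch beats the best so far.
        if current_valid_loss < self.best_valid_loss:
            self.best_valid_loss = current_valid_loss
            torch.save(
                {"epoch": epoch, "model_state_dict": model.state_dict()},
                self.save_path,
            )
            return True
        return False


model = nn.Linear(4, 2)  # stand-in for the real network
best_path = os.path.join(tempfile.mkdtemp(), "best_model.pth")  # assumed path
save_best_model = SaveBestModel(best_path)

# Made-up validation losses, standing in for a real training loop.
fake_valid_losses = [0.9, 0.7, 0.8, 0.6, 0.65]
saved_epochs = [
    epoch
    for epoch, loss in enumerate(fake_valid_losses, start=1)
    if save_best_model(loss, epoch, model)
]
best_epoch = torch.load(best_path, map_location="cpu")["epoch"]
```

In a real loop the call would sit right after the validation step of each epoch, and the last-epoch weights could still be saved separately so that both checkpoints can be compared on the test set.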

And creating these three sets instead of the usual training and validation sets will take a few extra lines of code. We import the torch module, which lets us save the model checkpoints. Regardless of the framework being used, saving the model is an important step so that it can be reused readily instead of training the neural network again from scratch.

This PyTorch recipe provides a solution for saving and loading PyTorch models, either entire models or just the parameters. You can then define a training loop in order to train the model, in this case with the MNIST dataset. Note that we don't repeat the creation of the training loop here.

For the sake of example, we will create a neural network for training images. Partially loading a model or loading a partial model are common scenarios when transfer learning or training a new complex model. Leveraging trained parameters, even if only a few are usable, will help to warm-start the training process and hopefully help your model converge much faster than training from scratch. I've created a full demo in C# for you that runs and tests a properly trained neural network on the MNIST training and test data. We then load the testing data from the KMNIST dataset on Lines 26 and 27. We randomly sample a total of 10 images from this dataset on Lines 28 and 29 using the Subset class (which creates a smaller "view" of the full testing data).
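For the warm-starting scenario mentioned above, one commonly used option is `load_state_dict` with `strict=False`, which loads the parameters whose names match and skips the rest. The two small models and their layer names below are hypothetical, and the state_dict is taken from an in-memory model rather than from a file to keep the sketch short.

```python
import torch
import torch.nn as nn

# Hypothetical "old" model whose trained parameters we want to reuse.
old_model = nn.Sequential()
old_model.add_module("features", nn.Linear(8, 16))
old_model.add_module("head", nn.Linear(16, 10))

# New model: same "features" layer, but a differently named output layer.
new_model = nn.Sequential()
new_model.add_module("features", nn.Linear(8, 16))
new_model.add_module("classifier", nn.Linear(16, 3))

# strict=False skips keys that do not match instead of raising an error.
# In practice the state_dict would usually come from torch.load(...).
result = new_model.load_state_dict(old_model.state_dict(), strict=False)

# result lists what was not loaded, which is worth checking before training.
missing = set(result.missing_keys)
unexpected = set(result.unexpected_keys)
```

Note that `strict=False` only tolerates missing or extra names; a parameter with a matching name but a different shape still raises an error, which is why the new output layer uses a different name here.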

We only need a single argument here, --model, the path to our trained PyTorch model saved to disk. Next comes our first and only set of fully connected layers. We define the number of inputs to the layer along with our desired number of output nodes. We will then implement three Python scripts with PyTorch, including our CNN architecture, training script, and a final script used to make predictions on input images. In this tutorial, you will receive a gentle introduction to training your first Convolutional Neural Network using the PyTorch deep learning library.